Creating Images from Audio with AI Tools

Transform sound into stunning visuals with cutting-edge AI technology

AI has opened new ways to merge sound and visuals. Audio-to-image tools use machine learning to analyze the frequencies and patterns in a sound and turn them into a visual representation, bridging two senses. For example, researchers have trained AI models to generate high-resolution images from audio recordings alone. The creative industry has embraced AI, with 83% of professionals already integrating it into their workflows. An AI image generator that works from audio opens doors to new applications in art, education, and entertainment.

Can AI Generate Images from Audio?

The Concept of Audio-to-Image Generation

Audio-to-image generation represents a groundbreaking intersection of sound and visuals. This process involves converting audio signals into visual representations, often using advanced algorithms. AI tools analyze audio inputs, such as speech or music, and translate them into images that reflect the essence of the sound. For instance, researchers have developed systems capable of creating high-resolution visuals from audio clips. These visuals may include abstract patterns, spectrograms, or even artistic interpretations of the sound.

By bridging auditory and visual media, this approach changes how sound data can be interpreted and lets you experience sound in a new way. Whether you are an artist, educator, or technologist, it opens up possibilities for both creative expression and practical applications.

[Chart: AI Adoption in Creative Industries, showing the percentage of professionals using AI tools in different creative fields]

How AI Interprets Sound Frequencies and Patterns

AI interprets sound by breaking it down into frequencies and patterns. Every sound can be described as a waveform, which AI tools analyze to extract meaningful data. For example, a song contains varying pitches, rhythms, and tones. AI models identify these elements and map them to corresponding visual features. This mapping often starts by converting the audio into a spectrogram, a visual representation of how the energy at each frequency changes over time.
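To make the spectrogram step concrete, here is a minimal sketch of a short-time Fourier transform (STFT) in Python with NumPy. It is an illustration, not the code any particular tool runs: the frame size (512 samples), hop (256 samples), Hann window, and 440 Hz test tone are arbitrary choices for the example.

```python
import numpy as np

def spectrogram(signal, frame_size=512, hop=256):
    """Magnitude spectrogram via a short-time Fourier transform (STFT).

    Returns an array of shape (num_frames, frame_size // 2 + 1):
    one row per time frame, one column per frequency bin.
    """
    if len(signal) < frame_size:
        raise ValueError("signal is shorter than one frame")
    window = np.hanning(frame_size)  # tapers frame edges to reduce leakage
    num_frames = 1 + (len(signal) - frame_size) // hop
    frames = np.stack([signal[i * hop : i * hop + frame_size] * window
                       for i in range(num_frames)])
    # rfft keeps only the non-negative frequencies of a real-valued signal
    return np.abs(np.fft.rfft(frames, axis=1))

sr = 16_000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440 * t)     # 1 second of a 440 Hz sine wave

spec = spectrogram(tone)
peak_hz = spec.mean(axis=0).argmax() * sr / 512   # bin index -> Hz
print(spec.shape, peak_hz)
```

Each row of `spec` is one moment in time and each column one frequency band. The strongest column lands within one bin (16,000 / 512 = 31.25 Hz) of the 440 Hz tone, which is the resolution this frame size allows.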

Some AI systems go a step further and feed the audio into learned models. For example, wav2vec 2.0, a self-supervised speech model from Facebook AI (now Meta AI), converts raw audio into numerical representations (embeddings) that capture its structure. Models built on such representations can create visuals that align with the mood, tone, or rhythm of the sound, so the generated images stay connected to the original audio.

"AI tools can extract insights from various audio signals, enabling innovative applications in sound recognition and visualization."

The Role of Machine Learning in Creating Visual Outputs

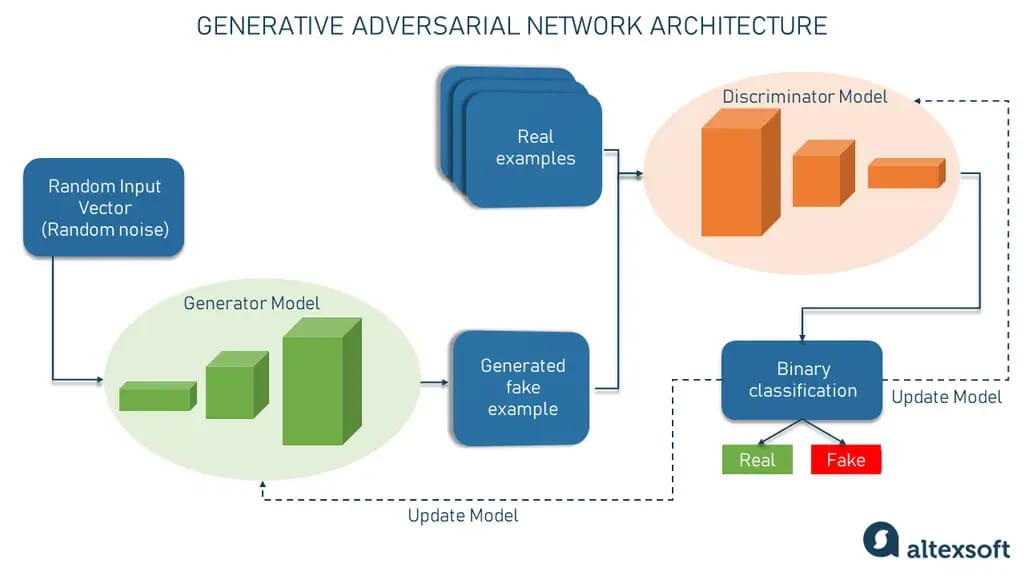

Machine learning plays a central role in audio-to-image generation: it powers the algorithms that transform sound into visuals. An image generation model, for instance, can learn from large datasets of audio clips paired with corresponding images. During training, the model identifies patterns and relationships between the two media, which lets it generate visuals that plausibly represent new audio it has not seen before.
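As a toy illustration of "learning relationships from paired data", the sketch below fits a linear map from audio features to image features with ordinary least squares in NumPy. Real audio-to-image systems use deep neural networks, not a 3x3 matrix; the feature names, the synthetic dataset, and `true_W` are hypothetical and exist only to show the train-then-predict pattern.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical paired dataset: each row of X is a small audio feature vector
# (say loudness, pitch, tempo) and each row of Y an image feature vector
# (say average red, green, blue). The pairs here are synthetic.
true_W = np.array([[0.8, 0.1, 0.0],
                   [0.0, 0.5, 0.3],
                   [0.2, 0.0, 0.9]])
X = rng.uniform(0, 1, size=(200, 3))
Y = X @ true_W + rng.normal(0, 0.01, size=(200, 3))

# "Training": find W that minimizes ||X W - Y||^2 (ordinary least squares)
W, *_ = np.linalg.lstsq(X, Y, rcond=None)

# "Inference": predict image features for an unseen audio clip
new_clip = np.array([0.9, 0.2, 0.4])
predicted_rgb = new_clip @ W
print(np.round(W, 2))
print(predicted_rgb)
```

The recovered `W` is close to `true_W` because the model saw enough paired examples; the same idea, scaled up to millions of pairs and far richer models, is what lets real systems tie images to sounds.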

Generative models built on architectures such as the Transformer are often used in this process, because they handle complex relationships in data well. They can create original content, including images, from audio inputs. Some tools use descriptive text as an intermediate step: the AI first converts audio into text, then uses that text to generate an image. This lets the system reuse mature text-to-image models, although any detail of the sound that the text does not describe is lost along the way.
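The audio-to-text-to-image approach is essentially a two-stage pipeline. The sketch below shows only that structure: `fake_transcribe` and `fake_generate` are hypothetical stand-ins, not real models or APIs, and the prompt template is an assumption. In practice you would plug in a speech-to-text (or audio-captioning) model and a text-to-image model.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stage signatures: a transcriber turns audio samples into text,
# and an image generator turns a text prompt into an image (any object).
Transcriber = Callable[[list[float], int], str]
ImageGenerator = Callable[[str], object]

@dataclass
class AudioToImageViaText:
    transcribe: Transcriber
    generate: ImageGenerator
    style: str = "digital illustration"

    def build_prompt(self, transcript: str) -> str:
        # The text stage is where a description of the sound becomes a prompt.
        return f"{transcript.strip()}, {self.style}"

    def __call__(self, samples: list[float], sample_rate: int):
        transcript = self.transcribe(samples, sample_rate)
        prompt = self.build_prompt(transcript)
        return prompt, self.generate(prompt)

# Stand-in stages for demonstration only.
def fake_transcribe(samples, sample_rate):
    return "a dog barking in a quiet park"

def fake_generate(prompt):
    return {"prompt": prompt, "pixels": None}

pipeline = AudioToImageViaText(fake_transcribe, fake_generate)
prompt, image = pipeline([0.0] * 16000, 16000)
print(prompt)
```

Keeping the stages behind plain callables makes the trade-off above visible: the image generator only ever sees `prompt`, so whatever the transcriber leaves out cannot reach the picture.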

Because the model learns from paired examples, the visuals it produces are tied to the input audio rather than being random. You can harness this for tasks ranging from artistic projects to educational tools, and explore new ways to visualize sound.

Audio-to-Image Generation Process

Below is a visualization of how AI transforms audio into images:

```mermaid
flowchart LR
    A[Audio Input] --> B[Frequency Analysis]
    B --> C[Pattern Recognition]
    C --> D[Feature Mapping]
    D --> E[Visual Generation]
    E --> F[Image Output]

    classDef orange fill:#FF8000,stroke:#333,stroke-width:1px,color:white;
    classDef blue fill:#42A5F5,stroke:#333,stroke-width:1px,color:white;
    class A,F orange;
    class B,C,D,E blue;
```
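The same stages can be sketched end to end without any machine learning. The hypothetical `audio_to_color_strip` below performs frequency analysis (spectral centroid per frame), a hand-written feature mapping (centroid to hue, loudness to brightness), and visual generation (one color column per frame), and `save_ppm` writes the image output as a PPM file. A trained model would learn the mapping instead; the specific hue and brightness rules here are arbitrary assumptions.

```python
import colorsys
import os
import tempfile
import numpy as np

def audio_to_color_strip(signal, sr, frame_size=1024, hop=512, height=64):
    """Toy audio-to-image mapping: one column of color per audio frame.

    Frequency analysis: spectral centroid ("brightness" of the sound) per frame.
    Feature mapping: centroid -> hue, RMS loudness -> HSV value.
    Returns a uint8 RGB array of shape (height, num_frames, 3).
    """
    x = np.asarray(signal, dtype=float)
    num_frames = 1 + (len(x) - frame_size) // hop
    freqs = np.fft.rfftfreq(frame_size, d=1 / sr)
    window = np.hanning(frame_size)
    centroids, levels = [], []
    for i in range(num_frames):
        frame = x[i * hop : i * hop + frame_size]
        mag = np.abs(np.fft.rfft(frame * window))
        centroids.append((freqs * mag).sum() / (mag.sum() + 1e-12))
        levels.append(np.sqrt(np.mean(frame ** 2)))
    loudest = max(levels) + 1e-12
    columns = []
    for centroid, level in zip(centroids, levels):
        # Low centroid -> red; higher centroids move through green and blue
        hue = 0.8 * min(centroid / (sr / 2), 1.0)
        r, g, b = colorsys.hsv_to_rgb(hue, 1.0, level / loudest)
        columns.append([int(255 * r), int(255 * g), int(255 * b)])
    strip = np.array(columns, dtype=np.uint8)          # (num_frames, 3)
    return np.repeat(strip[np.newaxis], height, axis=0)

def save_ppm(path, image):
    """Write an RGB uint8 array as a binary PPM (P6) image file."""
    h, w, _ = image.shape
    with open(path, "wb") as f:
        f.write(f"P6 {w} {h} 255\n".encode("ascii"))
        f.write(image.tobytes())

sr = 16_000
t = np.arange(sr // 2) / sr
sound = np.concatenate([np.sin(2 * np.pi * 200 * t),     # 0.5 s low tone
                        np.sin(2 * np.pi * 5000 * t)])   # 0.5 s high tone
img = audio_to_color_strip(sound, sr)
out_path = os.path.join(tempfile.mkdtemp(), "strip.ppm")
save_ppm(out_path, img)
print(img.shape, img[0, 0], img[0, -1])
```

The low tone comes out red and the high tone cyan, so the strip "shows" the change in pitch. PPM was chosen only because it needs no image library; any format would do.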

Beginner's Guide to AI Audio-to-Image Generation

Understanding the Basics

Audio-to-image generation may sound complex, but understanding its foundation makes it approachable. At its core, this process involves converting audio signals into visual outputs. AI tools analyze sound elements like pitch, rhythm, and frequency to create images that represent the essence of the audio. For example, researchers have trained AI models using paired audio and video data collected from cities worldwide. These models learned to generate high-resolution visuals based solely on sound inputs.

To begin, you should familiarize yourself with spectrograms. A spectrogram is a visual representation of sound frequencies over time. It serves as a bridge between audio and visuals, helping AI tools interpret sound in a way that can be translated into images. Many image generation tools rely on spectrograms as a starting point for creating visuals.
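Before a spectrogram can be treated as an image, its magnitudes are usually converted to decibels and scaled to pixel values. The sketch below shows one common way to do that with NumPy, assuming a magnitude array laid out as time frames by frequency bins; the -80 dB floor and the tiny example array are arbitrary choices.

```python
import numpy as np

def spectrogram_to_image(magnitude, floor_db=-80.0):
    """Turn a magnitude spectrogram (time x frequency) into an 8-bit image.

    - Converts magnitudes to decibels relative to the loudest bin, since
      loudness perception (and useful contrast) is roughly logarithmic.
    - Clips everything quieter than `floor_db` to black.
    - Transposes so time runs left to right, and flips so low frequencies
      sit at the bottom, the usual way spectrograms are drawn.
    Assumes at least one nonzero magnitude.
    """
    mag = np.asarray(magnitude, dtype=float)
    db = 20 * np.log10(np.maximum(mag, 1e-12) / mag.max())
    db = np.clip(db, floor_db, 0.0)
    scaled = (db - floor_db) / -floor_db          # 0.0 (floor) .. 1.0 (max)
    image = np.round(scaled * 255).astype(np.uint8)
    return np.flipud(image.T)

# Tiny example: 2 time frames x 3 frequency bins
mag = np.array([[1.0, 0.1, 0.0001],
                [0.001, 1.0, 0.0]])
img = spectrogram_to_image(mag)
print(img)
```

Each pixel now encodes loudness on a log scale, which is the form in which many tools consume a spectrogram as a picture.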

Understanding the role of machine learning is also essential. AI models learn from large datasets, identifying patterns and relationships between audio and images. This learning process enables the AI to produce visuals that align with the mood or tone of the sound. By grasping these basics, you can better appreciate how audio-to-image technology works and how to use it effectively.

Step-by-Step Process for Beginners

If you're new to audio-to-image generation, follow these steps to get started:

- Choose an AI Tool: Select an AI tool designed for audio-to-image generation. Look for tools that are beginner-friendly and offer clear instructions. Some tools require you to upload audio files, while others allow real-time audio input.

- Prepare Your Audio File: Make sure your audio file is ready for processing. Use high-quality recordings to get better results. If possible, trim the audio to the specific segment you want to visualize.

- Convert Audio to Spectrogram: Many tools convert audio into spectrograms automatically. If your tool accepts spectrogram images as input, you can also create one yourself with free software such as Audacity or Sonic Visualiser.

- Upload and Process: Upload your audio file or spectrogram to the AI tool. Follow the tool's instructions to start the image generation process. The AI will analyze the audio and create a visual output based on its interpretation.

- Review and Refine: Examine the generated image. If the result doesn't meet your expectations, adjust the audio input or experiment with different settings in the tool. Some tools let you tweak parameters to refine the output.

- Save and Share: Once you are satisfied with the result, save the image. You can use it for creative projects, presentations, or personal enjoyment.

By following these steps, you can explore the exciting possibilities of audio-to-image generation. This process not only enhances creativity but also provides a unique way to visualize sound.
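If your tool does not trim audio for you, the "Prepare Your Audio File" step can be scripted. The sketch below uses only Python's standard `wave` module, so it assumes an uncompressed PCM WAV file (MP3 would need a separate decoder). The `trim_wav` helper and the generated test tone are illustrative.

```python
import math
import os
import struct
import tempfile
import wave

def trim_wav(src_path, dst_path, start_s, end_s):
    """Copy the [start_s, end_s) segment of a PCM WAV file to a new WAV file."""
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        rate = src.getframerate()
        start = int(start_s * rate)
        end = min(int(end_s * rate), src.getnframes())
        if not 0 <= start < end:
            raise ValueError("empty or out-of-range segment")
        src.setpos(start)
        frames = src.readframes(end - start)
    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)    # same channels, sample width, and rate
        dst.writeframes(frames)  # the header's frame count is fixed on close

# Demo: write a 2-second 440 Hz tone (16-bit mono, 8 kHz), keep 0.5 s to 1.5 s
tmp = tempfile.mkdtemp()
src = os.path.join(tmp, "tone.wav")
dst = os.path.join(tmp, "clip.wav")
with wave.open(src, "wb") as w:
    w.setnchannels(1)
    w.setsampwidth(2)
    w.setframerate(8000)
    w.writeframes(b"".join(
        struct.pack("<h", int(10000 * math.sin(2 * math.pi * 440 * i / 8000)))
        for i in range(16000)))

trim_wav(src, dst, 0.5, 1.5)
with wave.open(dst, "rb") as clip:
    clip_seconds = clip.getnframes() / clip.getframerate()
print(clip_seconds)
```

Reusing the source file's parameters means the trimmed clip keeps its original sample rate and bit depth, so nothing is resampled before upload.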

Audio Processing Workflow

The diagram below shows the typical workflow for processing audio into visual outputs:

```mermaid
flowchart TD
    A[Record Audio] --> B[Clean Audio]
    B --> C[Convert to Spectrogram]
    C --> D{Quality Check}
    D -->|Good Quality| E[Upload to AI Tool]
    D -->|Poor Quality| B
    E --> F[Generate Image]
    F --> G{Review Results}
    G -->|Satisfactory| H[Save & Share]
    G -->|Needs Improvement| I[Adjust Settings]
    I --> F

    classDef process fill:#FF8000,stroke:#333,stroke-width:1px,color:white;
    classDef decision fill:#42A5F5,stroke:#333,stroke-width:1px,color:white;
    classDef endpoint fill:#66BB6A,stroke:#333,stroke-width:1px,color:white;
    class A,B,C,E,F,I process;
    class D,G decision;
    class H endpoint;
```
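The "Quality Check" decision in the diagram can be partly automated. The sketch below, assuming a mono float signal scaled to [-1, 1] and at least one 1024-sample frame, flags clipping and estimates a crude signal-to-noise ratio by comparing the loudest and quietest 10% of frames. The thresholds in the hypothetical `passes_quality_check` are illustrative assumptions, not values any tool documents.

```python
import numpy as np

def quality_report(signal, frame_size=1024, clip_level=0.999):
    """Heuristic quality numbers for a float signal scaled to [-1, 1]."""
    x = np.asarray(signal, dtype=float)
    # Share of samples at or beyond full scale (a sign of clipping)
    clipped_fraction = float(np.mean(np.abs(x) >= clip_level))
    n = len(x) // frame_size
    frames = x[: n * frame_size].reshape(n, frame_size)
    rms = np.sort(np.sqrt(np.mean(frames ** 2, axis=1))) + 1e-12
    k = max(1, n // 10)
    # Loudest 10% of frames vs quietest 10%: a crude signal-to-noise proxy
    snr_db = 20 * np.log10(rms[-k:].mean() / rms[:k].mean())
    return {"peak": float(np.abs(x).max()),
            "clipped_fraction": clipped_fraction,
            "snr_db": float(snr_db)}

def passes_quality_check(report, max_clipped=0.001, min_snr_db=20.0):
    # Illustrative thresholds, not values any particular tool requires
    return (report["clipped_fraction"] <= max_clipped
            and report["snr_db"] >= min_snr_db)

rng = np.random.default_rng(1)
sr = 16_000
t = np.arange(2 * sr) / sr
tone = 0.5 * np.sin(2 * np.pi * 440 * t) * (t < 1.0)  # 1 s tone, then silence
clean = tone + rng.normal(0, 0.001, t.size)
noisy = tone + rng.normal(0, 0.2, t.size)
clipped = np.clip(3 * tone, -1, 1) + rng.normal(0, 0.001, t.size)

results = {name: passes_quality_check(quality_report(sig))
           for name, sig in [("clean", clean), ("noisy", noisy), ("clipped", clipped)]}
print(results)
```

A recording that fails goes back to the "Clean Audio" step, matching the loop in the diagram.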

Best AI Tools for Audio-to-Image Generation

Overview of Popular AI Image Generators

AI tools have transformed the creative process, and some now let you generate images from audio. Among the most popular image generators, DALL·E 2 and Midjourney stand out for their capabilities. Both convert text prompts into images rather than taking audio directly, but their underlying technology shows how one kind of input can be translated into visuals, the same idea behind audio-to-image tools.

For beginners, Canva offers a user-friendly platform with AI-powered image generation features. It simplifies the process, making it accessible even if you have no prior experience with AI tools. Additionally, advanced AI models trained for audio-to-image synthesis can create high-resolution visuals directly from audio recordings. These tools analyze sound patterns and frequencies to produce images that reflect the essence of the audio input.

"Generative AI projects like DALLE2 and Midjourney showcase the versatility of AI in creating visuals from diverse inputs."

[Table: AI Tool Comparison, comparing popular AI tools based on key features]

Features and Capabilities of Text-to-Image AI Tools

Text-to-image AI tools have revolutionized how you can create visuals. These tools use advanced machine learning models to interpret descriptive text and generate corresponding images. Their features include:

- High-Resolution Outputs: Many tools produce images with exceptional clarity and detail, suitable for professional use.

- Creative Flexibility: You can experiment with various styles, from abstract art to realistic visuals.

- Ease of Use: Most platforms offer intuitive interfaces, allowing you to input text prompts and receive images within seconds.

Some tools also integrate audio processing capabilities. For instance, they may convert audio into descriptive text before generating an image. This approach bridges the gap between sound and visuals, enabling you to visualize audio in a creative and meaningful way. Tools like these are invaluable for artists, educators, and content creators seeking innovative ways to express ideas.

PageOn.ai: A Recommended AI Image Generator from Audio

If you're looking for an AI image generator from audio, PageOn.ai is a top recommendation. This tool specializes in transforming audio inputs into visually compelling images. It uses advanced algorithms to analyze sound frequencies, rhythms, and tones, ensuring that the generated visuals align closely with the original audio.

PageOn.ai stands out for its user-friendly design and robust features. It supports both real-time audio input and pre-recorded files, giving you flexibility in how you use it. The tool also allows customization, enabling you to adjust parameters and refine the output to match your vision. Whether you're working on an artistic project or exploring new educational tools, PageOn.ai provides a reliable and efficient solution.

By leveraging tools like PageOn.ai, you can unlock the full potential of audio-to-image technology. These tools not only enhance creativity but also open up new possibilities for innovation across various fields.

How to Use PageOn.ai for Audio-to-Image Generation

Step-by-Step Instructions

Using PageOn.ai to transform audio into images is straightforward. Follow these steps to get started:

- Sign Up and Access the Tool: Visit the official website of PageOn.ai and create an account. Once registered, log in to access the platform's features. The interface is user-friendly, making it easy for beginners to navigate.

- Upload Your Audio File: Prepare your audio file for upload. Ensure the file is in a supported format, such as MP3 or WAV. Click the "Upload" button on the dashboard and select your audio file. For real-time audio input, connect your microphone and follow the prompts.

- Adjust Settings and Preferences: After uploading, you can customize the settings. Choose the style or theme for the generated image. Options may include abstract art, realistic visuals, or spectrogram-based designs. Adjust parameters like color schemes or resolution to match your creative vision.

- Initiate the Generation Process: Click the "Generate" button to start the process. The AI will analyze the audio, focusing on elements like frequency, rhythm, and tone. Within moments, the tool will produce a visual representation of your audio.

- Review and Refine the Output: Examine the generated image. If it doesn't meet your expectations, use the editing options provided by PageOn.ai. You can tweak the settings or re-upload a modified audio file for better results.

- Download and Share Your Image: Once satisfied, download the final image. Use it for personal projects, presentations, or creative endeavors. Sharing your work on social media or with peers can inspire others to explore this technology.

By following these steps, you can efficiently create stunning visuals from audio using PageOn.ai.
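The upload step asks for a supported format such as MP3 or WAV. A file's extension can be wrong, so a quick local check of its first bytes can catch mistakes before uploading. This is a hypothetical helper, not part of PageOn.ai; it recognizes only the two formats named above, and the signatures it checks (the RIFF/WAVE header, and an ID3 tag or Layer III frame sync for MP3) are standard but not exhaustive.

```python
import os
import tempfile

def sniff_audio_format(path):
    """Guess 'wav' or 'mp3' from a file's first bytes; None if unrecognized."""
    with open(path, "rb") as f:
        head = f.read(12)
    if head[:4] == b"RIFF" and head[8:12] == b"WAVE":
        return "wav"
    if head[:3] == b"ID3":  # MP3 that starts with an ID3v2 metadata tag
        return "mp3"
    # Bare MPEG audio frame: 11 sync bits set, layer bits 01 (Layer III)
    if (len(head) >= 2 and head[0] == 0xFF
            and (head[1] & 0xE0) == 0xE0
            and (head[1] & 0x06) == 0x02):
        return "mp3"
    return None

# Demo with small fake files; only their first bytes matter here
samples = {
    "song.wav": b"RIFF\x24\x00\x00\x00WAVEfmt ",
    "song.mp3": b"ID3\x04\x00\x00\x00\x00\x00\x00",
    "raw.mp3": b"\xff\xfb\x90\x64" + b"\x00" * 8,
    "notes.mp3": b"just some text",
}
tmp = tempfile.mkdtemp()
results = {}
for name, data in samples.items():
    path = os.path.join(tmp, name)
    with open(path, "wb") as f:
        f.write(data)
    results[name] = sniff_audio_format(path)
print(results)
```

Note that `notes.mp3` is rejected despite its extension, which is exactly the kind of mistake this check is meant to catch.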

PageOn.ai Workflow

The diagram below illustrates the PageOn.ai audio-to-image generation process:

```mermaid
flowchart LR
    A[Sign Up/Login] --> B[Upload Audio]
    B --> C[Customize Settings]
    C --> D[Generate Image]
    D --> E{Review Output}
    E -->|Satisfied| F[Download & Share]
    E -->|Not Satisfied| G[Refine Settings]
    G --> D

    classDef orange fill:#FF8000,stroke:#333,stroke-width:1px,color:white;
    classDef blue fill:#42A5F5,stroke:#333,stroke-width:1px,color:white;
    classDef green fill:#66BB6A,stroke:#333,stroke-width:1px,color:white;
    class A,B,C orange;
    class D,E,G blue;
    class F green;
```

Tips for Optimizing Your Results

To achieve the best outcomes with PageOn.ai, consider these practical tips:

- Use High-Quality Audio: Clear and well-recorded audio files yield better visual outputs. Avoid background noise or distortion, as these can affect the AI's interpretation.

- Experiment with Styles: Explore the different visual styles the tool offers. Trying various themes can help you discover unique representations that align with your creative goals.

- Focus on Specific Audio Segments: Trim your audio file to highlight the most impactful sections. Shorter clips with distinct tones or rhythms often produce more meaningful visuals.

- Leverage Real-Time Input: For dynamic projects, use the real-time audio input feature. This lets you experiment with live sounds and see the results instantly.

- Combine Outputs for Complex Projects: Generate multiple images from different audio clips. Combining these visuals can create a cohesive and intricate design for larger projects.

- Stay Updated on Features: Regularly check PageOn.ai for updates and new features. The platform continuously evolves, offering enhanced tools and capabilities for users.

By applying these tips, you can maximize the potential of PageOn.ai and create visuals that truly capture the essence of your audio.
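For the "Focus on Specific Audio Segments" tip, a script can suggest a starting point by finding the window with the most energy. The sketch below is a hypothetical NumPy helper that assumes "most impactful" roughly means "loudest"; you would still listen to the result before trimming.

```python
import numpy as np

def loudest_segment(signal, sr, seconds=5.0, hop=0.1):
    """Return (start_s, end_s) of the window with the most energy.

    A crude stand-in for "the most impactful section": it favors loud
    passages, not necessarily musically interesting ones.
    """
    x = np.asarray(signal, dtype=float)
    win = int(seconds * sr)
    step = max(1, int(hop * sr))
    if len(x) <= win:
        return 0.0, len(x) / sr
    # Prefix sums of squared samples give each window's energy in O(1)
    energy = np.concatenate(([0.0], np.cumsum(x ** 2)))
    starts = np.arange(0, len(x) - win + 1, step)
    window_energy = energy[starts + win] - energy[starts]
    best = int(starts[np.argmax(window_energy)])
    return best / sr, (best + win) / sr

rng = np.random.default_rng(2)
sr = 1000
x = rng.normal(0, 0.01, 10 * sr)                     # 10 s of quiet noise
n = np.arange(sr)
x[6 * sr : 7 * sr] += 0.5 * np.sin(2 * np.pi * 50 * n / sr)  # loud 1 s burst at 6 s
segment = loudest_segment(x, sr, seconds=1.0)
print(segment)
```

The returned start and end times can be passed straight to a trimming step before uploading the clip.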

Real-World Applications of Audio-to-Image Technology

Applications in the Arts

Audio-to-image technology has unlocked new creative avenues in the arts. You can use this innovation to create interactive visual stories that respond to soundscapes or spoken words. For instance, artists now design immersive installations where visuals shift dynamically based on live audio inputs. This approach transforms traditional art into an engaging, multi-sensory experience.

Museums and galleries have also embraced this technology. A research project at the University of Texas at Austin demonstrated how audio could enhance visual displays. By converting sound into visuals, exhibitions become more interactive and captivating for visitors. Imagine walking through a gallery where the artwork changes based on the ambient sounds or your voice. This fusion of sound and visuals redefines how you experience art.

"Audio-to-image tools allow artists to visualize sound in ways that were once unimaginable, creating a bridge between auditory and visual creativity."

Applications in Education

In education, audio-to-image technology offers innovative ways to engage learners. Teachers can use it to transform complex audio concepts into visual aids, making lessons more accessible and easier to understand. For example, spectrograms generated from audio files help students grasp the structure of sound waves. This visual representation simplifies abstract ideas, enabling better comprehension.

Interactive learning environments also benefit from this technology. By integrating audio-to-image tools, educators can create dynamic presentations that respond to students' voices or classroom sounds. This approach fosters active participation and keeps learners engaged. Additionally, museums and science centers use these tools to visualize sound fields, offering visitors a chance to "see" sound rather than just hear it. Such experiences make learning both fun and memorable.

[Chart: Educational Impact of Audio-Visual Integration, showing study results on improvement in student comprehension when using audio-to-visual tools]

Applications in Entertainment

The entertainment industry has embraced audio-to-image technology to enhance storytelling and audience engagement. Filmmakers and game developers use it to create visuals that stay in sync with soundtracks or dialogue. This synchronization adds depth to narratives, making them more immersive for viewers and players.

Live performances also benefit from this innovation. Musicians can generate real-time visuals that respond to their music, creating a captivating experience for the audience. For example, optical sound field imaging, developed by NTT, allows performers to visualize sound fields using light. This technology transforms concerts into visually stunning events, where sound and light merge seamlessly.

"Audio-to-image tools are revolutionizing entertainment by blending sound and visuals, offering audiences a richer and more immersive experience."

From the arts to education and entertainment, audio-to-image technology continues to reshape how you interact with sound and visuals. Its applications are vast, and its potential is only beginning to unfold.

Benefits of Using AI for Audio-to-Image Generation

Creativity and Innovation

AI tools for audio-to-image generation unlock new dimensions of creativity. They allow you to visualize sound in ways that were once unimaginable. By analyzing audio patterns, these tools generate visuals that reflect the mood, tone, or rhythm of the sound. This capability inspires artists, musicians, and designers to explore fresh ideas and push creative boundaries.

For example, you can use AI-powered tools to transform a simple melody into a stunning visual masterpiece. These tools provide access to a wide range of creative elements, such as abstract designs, realistic imagery, or even dynamic animations. This variety enables you to experiment with different styles and discover unique artistic expressions.

"AI-powered tools enhance creativity by offering automated techniques and real-time recognition of sound elements."

In addition, AI image generation fosters innovation by bridging the gap between auditory and visual mediums. It encourages you to think beyond traditional formats and embrace multi-sensory experiences. Whether you're creating art, composing music, or designing educational materials, this technology empowers you to bring your vision to life in extraordinary ways.

Accessibility and Efficiency

AI tools make audio-to-image generation more accessible than ever before. You no longer need advanced technical skills or expensive software to create high-quality visuals. Many platforms offer user-friendly interfaces and step-by-step guides, making it easy for beginners to get started. This accessibility ensures that anyone, regardless of expertise, can explore the potential of AI image generation.

Efficiency is another significant advantage. AI automates complex processes, saving you time and effort. For instance, these tools can analyze audio files, identify key elements, and generate visuals within seconds. This speed allows you to focus on refining your creative ideas rather than spending hours on manual tasks.

Moreover, AI tools enhance efficiency through features like real-time instrument recognition and voice separation. These capabilities streamline workflows and improve the accuracy of the final output. By leveraging these tools, you can achieve professional results with minimal resources.

"AI tools provide automated solutions that improve accessibility and efficiency in creative projects."

The combination of accessibility and efficiency makes AI image generation a valuable resource for artists, educators, and content creators. It democratizes the creative process, enabling you to produce visually compelling content with ease.

AI tools like PageOn.ai empower you to transform audio into images, bridging the gap between sound and visuals. The technology has applications in art, education, and entertainment: you can use it to create immersive experiences, simplify complex concepts, or enhance storytelling. Generating images from sound opens new doors for creativity and innovation. Explore an AI art generator like this one to unlock your creative potential and redefine how you visualize sound.

FAQ

What is audio-to-image transformation, and how does it work?

Audio-to-image transformation involves converting sound into visual representations. AI tools may chain several kinds of models: audio models analyze the sound's frequencies, rhythms, and patterns (or transcribe speech), Large Language Models (LLMs) can turn that analysis into a text description, and diffusion models generate an image from it. For example, a melody can be transformed into an abstract visual, or into a spectrogram that represents the sound's structure.

"This process bridges the gap between auditory and visual mediums, offering a unique way to experience sound."

Can AI tools create visuals from live audio?

Yes, many AI tools support real-time audio input. These tools analyze live sounds, such as speech or music, and instantly generate corresponding visuals. This feature is particularly useful for live performances, where musicians or speakers can create dynamic visuals that respond to their audio in real time. It enhances audience engagement by combining sound and visuals seamlessly.
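Live visuals like these usually work by processing audio in short blocks as it arrives. The sketch below simulates that with NumPy: `blocks` groups a sample stream into fixed-size chunks, and `level_to_bar` turns each chunk's loudness into a simple text "visual". Both are hypothetical helpers; a real application would receive blocks from an audio-input library's callback instead of a pre-recorded array, and would draw graphics rather than text.

```python
import numpy as np

def blocks(stream, block_size):
    """Group an iterable of samples (e.g. a live feed) into fixed-size blocks.

    A trailing partial block is dropped.
    """
    buf = []
    for sample in stream:
        buf.append(sample)
        if len(buf) == block_size:
            yield np.array(buf, dtype=float)
            buf = []

def level_to_bar(block, width=20, floor_db=-60.0):
    """Map one block's RMS level (dB relative to full scale) to a text bar."""
    rms = np.sqrt(np.mean(block ** 2)) + 1e-12
    db = max(floor_db, 20 * np.log10(rms))
    filled = min(width, int(round((db - floor_db) / -floor_db * width)))
    return "#" * filled + "." * (width - filled)

sr = 8000
t = np.arange(sr) / sr
live = 0.5 * np.sin(2 * np.pi * 220 * t) * t   # simulated 1 s fade-in
bars = [level_to_bar(b) for b in blocks(iter(live), block_size=1600)]
for bar in bars:
    print(bar)
```

Each 0.2-second block is turned into a visual as soon as it is complete, so the delay between sound and picture is bounded by the block size.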

How can AI assist in music generation and audio processing?

AI plays a significant role in music generation and audio processing. It can help with tasks like creating samples and loops, automating mixing and mastering, and recognizing instruments in real time. Additionally, AI tools can separate voices or sources in audio files, making it easier to isolate specific elements. These capabilities streamline workflows and inspire creativity in music production.

What are spectrograms, and why are they important in this process?

A spectrogram is a visual representation of sound frequencies over time. It serves as a bridge between audio and visuals, helping AI tools interpret sound in a format suitable for image generation. Spectrograms allow AI to analyze the structure of audio, enabling the creation of visuals that align with the sound's tone, rhythm, or mood.

Are there any limitations to audio-to-image technology?

While audio-to-image technology is innovative, it has some limitations. The quality of the generated visuals depends on the input audio. Poor-quality recordings or background noise can affect the results. Additionally, the technology may struggle with highly complex or layered audio inputs. However, advancements in AI continue to improve these tools, making them more accurate and versatile.

Can this technology be used for educational purposes?

Absolutely. Audio-to-image tools offer unique opportunities in education. Teachers can use them to visualize sound concepts, such as waveforms or frequencies, making lessons more engaging. Museums and science centers also use this technology to create interactive exhibits, allowing visitors to "see" sound and understand its properties in a fun and memorable way.

Is audio-to-image technology suitable for artistic projects?

Yes, this technology is ideal for artistic projects. Artists can use it to create visuals that respond to soundscapes or spoken words, adding a dynamic element to their work. For instance, you can design immersive installations where visuals shift based on live audio inputs. This approach transforms traditional art into a multi-sensory experience.

How can I ensure the best results when using audio-to-image tools?

To achieve optimal results, use high-quality audio recordings. Clear sound without background noise helps AI tools interpret the input more accurately. Experiment with different styles and settings to find the best match for your creative vision. Additionally, focus on specific audio segments that have distinct tones or rhythms for more meaningful visuals.

What industries benefit the most from audio-to-image technology?

Several industries benefit from this technology, including arts, education, and entertainment. Artists use it to visualize sound creatively, while educators employ it to simplify complex concepts. In entertainment, filmmakers and game developers integrate it to enhance storytelling. Live performers also use it to create captivating visuals that sync with their music or dialogue.
